Multi-queue

Introduction to Multi-Queue

By default, each network interface has one traffic queue that is handled by one CPU at a time. Because the SND (Secure Network Distributor, the SecureXL and CoreXL dispatcher) runs on the CPUs that handle the traffic queues, you cannot use more CPUs for acceleration than the number of interfaces handling traffic.

Multi-queue lets you configure more than one traffic queue for each network interface. This means more than one CPU can be used for acceleration.

Multi-queue Requirements and Limitations

- Multi-queue is not supported on single core computers.

- Network interfaces must support multi-queue

- The number of queues is limited by the number of CPUs and the type of interface driver:

| Driver type | Maximum recommended number of rx queues |
| --- | --- |
| igb | 4 |
| ixgbe | 16 |

Deciding if Multi-queue is needed

This section will help you decide if you can benefit from configuring Multi-queue. We recommend that you do these steps before configuring Multi-queue:

- Make sure that SecureXL is enabled

- Examine the CPU roles allocation

- Examine CPU Utilization

- Decide if more CPUs can be allocated to the SND

- Make sure that network interfaces support Multi-queue

Making sure that SecureXL is enabled

- On the Security Gateway, run: fwaccel stat

- Examine the Accelerator Status value:

[Expert@gw-30123d:0]# fwaccel stat

Accelerator Status : on

Accept Templates : enabled

Drop Templates : disabled

NAT Templates : disabled by user

Accelerator Features : Accounting, NAT, Cryptography, Routing,

HasClock, Templates, Synchronous, IdleDetection,

Sequencing, TcpStateDetect, AutoExpire,

DelayedNotif, TcpStateDetectV2, CPLS, WireMode,

DropTemplates, NatTemplates, Streaming,

MultiFW, AntiSpoofing, DoS Defender, ViolationStats,

Nac, AsychronicNotif, ERDOS

Cryptography Features : Tunnel, UDPEncapsulation, MD5, SHA1, NULL,

3DES, DES, CAST, CAST-40, AES-128, AES-256,

ESP, LinkSelection, DynamicVPN, NatTraversal,

EncRouting, AES-XCBC, SHA256

SecureXL is enabled if the value of this field is: on.

Note -

- Multi-queue is relevant only if SecureXL is enabled.

- Drop templates still show in the command output even though support for drop templates stopped in R75.40.

Examining the CPU roles allocation

To see the CPU roles allocation, run: fw ctl affinity -l

This command shows the CPU affinity of the interfaces, which determines the SND CPUs. It also shows the CPU affinity of the CoreXL firewall instances. For example, if you run the command on a Security Gateway:

[Expert@gw-30123d:0]# fw ctl affinity -l

Mgmt: CPU 0

eth1-05: CPU 0

eth1-06: CPU 1

fw_0: CPU 5

fw_1: CPU 4

fw_2: CPU 3

fw_3: CPU 2

In this example:

- The SND is running on CPU 0 and CPU 1

- CoreXL firewall instances are running on CPUs 2-5

If you run the command on a VSX gateway:

[Expert@gw-30123d:0]# fw ctl affinity -l

Mgmt: CPU 0

eth1-05: CPU 0

eth1-06: CPU 1

VS_0 fwk: CPU 2 3 4 5

VS_1 fwk: CPU 2 3 4 5

In this example:

- The SND is running on CPUs 0-1

- CoreXL firewall instances (part of fwk processes) of all the Virtual Systems are running on CPUs 2-5.
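The role split read off in both examples can also be derived mechanically from the command output. The following Python sketch is illustrative only and is not a Check Point tool. The function names are hypothetical, and it assumes that every relevant line has the form `<name>: CPU <id> [<id> ...]` as in the samples above, with firewall entries named `fw_<n>` or ending in `fwk`.

```python
# Hypothetical helper: classify CPUs from saved `fw ctl affinity -l` output.
# Assumes lines look like "eth1-05: CPU 0" or "VS_0 fwk: CPU 2 3 4 5".

SAMPLE = """\
Mgmt: CPU 0
eth1-05: CPU 0
eth1-06: CPU 1
fw_0: CPU 5
fw_1: CPU 4
fw_2: CPU 3
fw_3: CPU 2
"""


def parse_affinity(output):
    """Map each name (interface, fw_N, or 'VS_N fwk') to a list of CPU IDs."""
    affinity = {}
    for line in output.splitlines():
        name, sep, cpus = line.partition(": CPU")
        if not sep:
            continue  # blank line or a format this sketch does not know
        # Keep numeric CPU IDs only; other tokens are ignored.
        affinity[name.strip()] = [int(c) for c in cpus.split() if c.isdigit()]
    return affinity


def split_roles(affinity):
    """Return (snd_cpus, firewall_cpus) as sorted lists of CPU IDs."""
    snd, firewall = set(), set()
    for name, cpus in affinity.items():
        is_firewall = name.startswith("fw_") or name.endswith("fwk")
        (firewall if is_firewall else snd).update(cpus)
    return sorted(snd), sorted(firewall)


if __name__ == "__main__":
    print(split_roles(parse_affinity(SAMPLE)))
```

For the Security Gateway sample this yields CPUs 0 and 1 for the SND and CPUs 2 through 5 for the CoreXL firewall instances, which matches the reading given above.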

Examining CPU Utilization

- On the Security Gateway, run:

top. - Press 1 to toggle the SMP view.

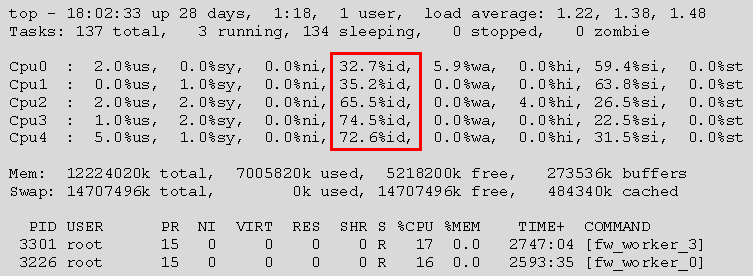

This shows the usage and idle percentage for each CPU. For example:

In this example:

- SND CPUs (CPU 0 and CPU 1) are approximately 30% idle

- CoreXL firewall instance CPUs are approximately 70% idle

Deciding if more CPUs can be allocated to the SND

If you have more network interfaces handling traffic than CPUs assigned to the SND (as shown in the output of the fw ctl affinity -l command), you can allocate more CPUs for the SND.

For example, if you have the following network interfaces:

- eth1-04 – connected to an internal network

- eth1-05 – connected to an internal network

- eth1-06 – connected to the DMZ

- eth1-07 – connected to the external network

And running fw ctl affinity -l shows this IRQ affinity:

[Expert@gw-30123d:0]# fw ctl affinity -l

Mgmt: CPU 0

eth1-04: CPU 1

eth1-05: CPU 0

eth1-06: CPU 1

eth1-07: CPU 0

fw_0: CPU 5

fw_1: CPU 4

fw_2: CPU 3

fw_3: CPU 2

You can change the IRQ affinity of the interfaces to use more CPUs for the SND.
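The check in this section is a simple comparison: count the interfaces that handle traffic and count the distinct CPUs they are bound to. A minimal Python sketch of that comparison follows. The function name and the input dictionary are hypothetical, and the caller is assumed to pass only interfaces that carry traffic (the Mgmt interface is left out here as an assumption).

```python
# Hypothetical check: are there more traffic interfaces than SND CPUs?

def can_allocate_more_snd_cpus(interface_affinity):
    """interface_affinity maps interface name -> list of CPU IDs it is bound to."""
    snd_cpus = {cpu for cpus in interface_affinity.values() for cpu in cpus}
    return len(interface_affinity) > len(snd_cpus)


# Affinity from the example above (traffic interfaces only).
EXAMPLE = {
    "eth1-04": [1],
    "eth1-05": [0],
    "eth1-06": [1],
    "eth1-07": [0],
}

if __name__ == "__main__":
    print(can_allocate_more_snd_cpus(EXAMPLE))
```

In the example, four interfaces share two CPUs, so the comparison is true and changing the IRQ affinity can give the SND more CPUs without Multi-queue.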

Making sure that the network interfaces support Multi-queue

Multi-queue is supported only on network cards that use igb (1Gb) or ixgbe (10Gb) drivers. Before upgrading these drivers, make sure that the latest version supports multi-queue.

| Gateway type | Network card |
| --- | --- |
| Security Appliance | Multi-queue is supported on these expansion cards for 4000, 12000, and 21000 appliances: CPAP-ACC-4-1C, CPAP-ACC-4-1F, CPAP-ACC-8-1C, CPAP-ACC-2-10F, CPAP-ACC-4-10F |
| IP appliance | The XMC 1Gb card is supported on: |
| Open server | Network cards that use igb (1Gb) or ixgbe (10Gb) drivers |

- To view which driver an interface is using, run: ethtool -i <interface name>

- When installing a new interface that uses the igb or ixgbe driver, run: cpmq reconfigure and then reboot.

Recommendation

We recommend configuring multi-queue when:

- CPU load for the SND is high (idle is less than 20%), and

- CPU load for CoreXL firewall instances is low (idle is greater than 50%), and

- You cannot assign more CPUs to the SND by changing interface IRQ affinity
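The three conditions can be written as a single rule. The Python sketch below is only an illustration of the recommendation as stated. The function and constant names are hypothetical, and it assumes you supply one representative idle percentage for the SND CPUs and one for the CoreXL firewall instance CPUs (for example, values read from top).

```python
# Hypothetical encoding of the recommendation above.

SND_IDLE_MAX = 20.0  # SND load counts as high when idle is below 20%
FW_IDLE_MIN = 50.0   # firewall instance load counts as low when idle is above 50%


def multi_queue_recommended(snd_idle_pct, fw_idle_pct, can_reassign_irq_affinity):
    """Return True when all three conditions of the recommendation hold."""
    return (
        snd_idle_pct < SND_IDLE_MAX
        and fw_idle_pct > FW_IDLE_MIN
        and not can_reassign_irq_affinity
    )


if __name__ == "__main__":
    print(multi_queue_recommended(15.0, 70.0, False))
```

With the utilization shown earlier (SND CPUs about 30% idle, firewall instance CPUs about 70% idle), the rule returns False because the SND idle value is not below 20%.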

Basic Multi-queue Configuration

The cpmq utility is used to view or change the current multi-queue configuration.

Configuring Multi-queue

The cpmq set command lets you configure Multi-queue on supported interfaces.

To configure Multi-queue:

Querying the current Multi-queue configuration

The cpmq get command shows the Multi-queue status of supported interfaces.

To see the Multi-queue configuration:

Run: cpmq get [-a]

The -a option shows the Multi-queue configuration for all supported interfaces (both active and inactive). For example:

[Expert@gw-30123d:0]# cpmq get -a

Active igb interfaces:

eth1-05 [On]

eth1-06 [Off]

eth1-01 [Off]

eth1-03 [Off]

eth1-04 [On]

Non active igb interfaces:

eth1-02 [Off]

Status messages

| Status | Meaning |
| --- | --- |
| On | Multi-queue is enabled on the interface |
| Off | Multi-queue is disabled on the interface |
| Pending On | Multi-queue is currently disabled. It will be enabled on this interface only after you reboot the gateway |
| Pending Off | Multi-queue is currently enabled. It will be disabled on this interface only after you reboot the gateway |

In this example:

Running the command without the -a option shows the active interfaces only.
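If you save the output of cpmq get -a, the status of each interface can be extracted with a few lines of Python. This is a sketch under stated assumptions, not a Check Point utility: the function names are hypothetical, and the parser assumes the exact layout of the sample above (a section header ending in "interfaces:" followed by `<interface> [<status>]` lines).

```python
import re

# Hypothetical parser for saved `cpmq get -a` output.

SAMPLE = """\
Active igb interfaces:
eth1-05 [On]
eth1-06 [Off]
eth1-01 [Off]
eth1-03 [Off]
eth1-04 [On]
Non active igb interfaces:
eth1-02 [Off]
"""


def parse_cpmq_get(output):
    """Return {interface: {"status": str, "active": bool}}."""
    interfaces = {}
    active = None  # unknown until the first section header is seen
    for raw in output.splitlines():
        line = raw.strip()
        header = re.match(r"(Active|Non active) \w+ interfaces:$", line)
        if header:
            active = header.group(1) == "Active"
            continue
        entry = re.match(r"(\S+)\s+\[(.+)\]$", line)
        if entry and active is not None:
            interfaces[entry.group(1)] = {
                "status": entry.group(2),
                "active": active,
            }
    return interfaces


def pending_reboot(interfaces):
    """List interfaces whose status is Pending On or Pending Off."""
    return sorted(
        name for name, info in interfaces.items()
        if info["status"].startswith("Pending")
    )


if __name__ == "__main__":
    for name, info in parse_cpmq_get(SAMPLE).items():
        print(name, info)
```

The pending_reboot helper reflects the two Pending statuses in the table: any interface it returns changes state only after the gateway is rebooted.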

Multi-queue Administration

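There are two main roles for CPUs applicable to SecureXL and CoreXL:

- SecureXL and CoreXL dispatcher CPU (the SND, Secure Network Distributor)

- CoreXL firewall instance CPU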

For best performance, the same CPU should not work in both roles. During installation, a default CPU role configuration is set. For example, on a twelve core computer, the two CPUs with the lowest CPU ID are set as SNDs and the ten CPUs with the highest CPU IDs are set as CoreXL firewall instances.

Without Multi-queue, the number of CPUs allocated to the SND is limited by the number of network interfaces handling the traffic. Each interface has one traffic queue, and each queue can be handled by only one CPU at a time. This means that the SND can use only one CPU at a time per network interface.

When most of the traffic is accelerated, the CPU load for SND can be very high while the CPU load for CoreXL firewall instances can be very low. This is an inefficient utilization of CPU capacity.

Multi-queue lets you configure more than one traffic queue for each supported network interface, so that more than one SND CPU can handle the traffic of a single network interface at a time. This balances the load efficiently between SND CPUs and CoreXL firewall instances CPUs.

Glossary of Terms

| Term | Description |
| --- | --- |
| SND | Secure Network Distributor. A CPU that runs SecureXL and CoreXL |
| rx queue | Receive packet queue |
| tx queue | Transmit packet queue |
| IRQ affinity | Binding an IRQ to a specific CPU or CPUs |

Advanced Multi-queue settings

Advanced multi-queue settings include:

- Controlling the number of queues

- IRQ Affinity

- Viewing CPU Utilization

Controlling the number of queues

Controlling the number of queues depends on the driver type:

| Driver type | Queues |
| --- | --- |
| ixgbe | When configuring Multi-queue for an ixgbe interface, an RxTx queue is created per CPU. You can control the number of active rx queues (all tx queues are active). |
| igb | When configuring Multi-queue for an igb interface, the number of tx and rx queues is calculated by the number of active rx queues. |

To control the number of active rx queues:

Run: cpmq set rx_num <igb/ixgbe> <number of active rx queues>

This command overrides the default value.

To view the number of active rx queues:

Run: cpmq get rx_num <igb/ixgbe>

To return to the recommended number of rx queues:

Run: cpmq set rx_num <igb/ixgbe> default

On a Security Gateway, the number of active queues changes automatically when you change the number of CoreXL firewall instances (using cpconfig). This number of active queues does not change if you configure the number of rx queues manually.

IRQ Affinity

The IRQ affinity of the queues is set automatically when the operating system boots, as shown (rx_num set to 3):

rxtx-0 -> CPU 0

rxtx-1 -> CPU 1

rxtx-2 -> CPU 2

and so on. This is also true when the rx and tx queues are assigned separate IRQs:

rx-0 -> CPU 0

tx-0 -> CPU 0

rx-1 -> CPU 1

tx-1 -> CPU 1

and so on.

- You cannot use the sim affinity or the fw ctl affinity commands to change and query the IRQ affinity for Multi-queue interfaces.

- You can reset the affinity of Multi-queue IRQs by running: cpmq set affinity

- You can view the affinity of Multi-queue IRQs by running: cpmq get -v

Important - Do not change the IRQ affinity of queues manually. Changing the IRQ affinity of the queues manually can affect performance.

Viewing CPU Utilization

- Find the CPUs assigned to Multi-queue IRQs by running: cpmq get -v

For example:

[Expert@gw-30123d:0]# cpmq get -v

Active igb interfaces:

eth1-05 [On]

eth1-06 [Off]

eth1-01 [Off]

eth1-03 [Off]

eth1-04 [On]

multi-queue affinity for igb interfaces:

eth1-05:

irq | cpu | queue

-----------------------------------------------------

178 0 TxRx-0

186 1 TxRx-1

eth1-04:

irq | cpu | queue

-----------------------------------------------------

123 0 TxRx-0

131 1 TxRx-1


In this example:

- Multi-queue is enabled on two igb interfaces (eth1-05 and eth1-04)

- The number of active rx queues is configured to 2 (for igb, the number of queues is calculated by the number of active rx queues).

- The IRQs for both interfaces are assigned to CPUs 0-1.

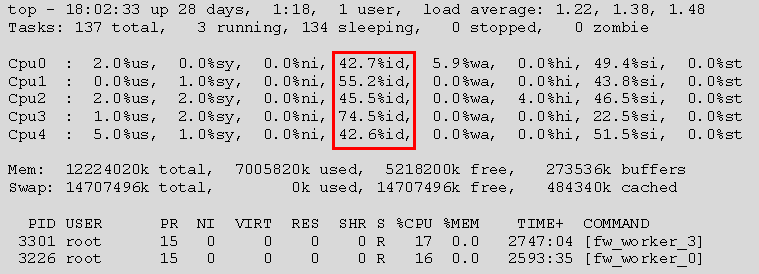

- Run:

top - Press 1 to toggle to the SMP view.

In the above example, CPU utilization of Multi-queue CPUs is approximately 50%, as CPU0 and CPU1 are handling the queues (as shown in step 1).
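To know which CPU rows to watch in top, you can collect the CPU column from saved cpmq get -v output. The Python sketch below is a hypothetical helper and assumes the layout of the sample above: an `<interface>:` line, followed by rows of `<irq> <cpu> <queue>`.

```python
import re

# Hypothetical parser for the affinity section of saved `cpmq get -v` output.

SAMPLE = """\
Active igb interfaces:
eth1-05 [On]
eth1-04 [On]
multi-queue affinity for igb interfaces:
eth1-05:
irq | cpu | queue
-----------------------------------------------------
178 0 TxRx-0
186 1 TxRx-1
eth1-04:
irq | cpu | queue
-----------------------------------------------------
123 0 TxRx-0
131 1 TxRx-1
"""


def multi_queue_cpus(output):
    """Return {interface: sorted list of CPU IDs that handle its queues}."""
    cpus = {}
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        iface = re.match(r"(\S+):$", line)  # a single token ending in ':'
        if iface:
            current = iface.group(1)
            cpus[current] = set()
            continue
        row = re.match(r"(\d+)\s+(\d+)\s+(\S+)$", line)  # irq, cpu, queue
        if row and current is not None:
            cpus[current].add(int(row.group(2)))
    return {name: sorted(ids) for name, ids in cpus.items()}


if __name__ == "__main__":
    print(multi_queue_cpus(SAMPLE))
```

For the sample, both interfaces map to CPUs 0 and 1, which are the CPUs whose utilization is read in the SMP view.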

The Sim Affinity Command

sim affinity

Description: A process used to automatically assign or cancel interface-CPU affinity.

Syntax: sim affinity

Parameters:

| Parameter | Description |
| --- | --- |
| -l | Lists affinity settings |
| -s | Sets static or manual affinity for interfaces without Multi-queue (and cancels the -a parameter) |
| -a | Automatically sets affinity for all interfaces every second |
| -h | Displays help |

Example: sim affinity -a

Comments:

By default, the sim affinity -a process runs, assigning affinity to both Multi-queue and non-Multi-queue interfaces. Before R76, Multi-queue interfaces were ignored by the sim affinity command.

If the sim affinity -a process has been canceled by running sim affinity -s, use cpmq set affinity to reset the affinity of the Multi-queue interfaces.

Overriding rx queue and interface limitations

- The number of rx queues is limited by the number of CPUs and the type of interface driver:

| Driver type | Maximum recommended number of rx queues |
| --- | --- |
| igb | 4 |
| ixgbe | 16 |

To add more rx queues, run:

cpmq set rx_num <igb/ixgbe> <number of active rx queues> -f

Special Scenarios and Configurations

- In Security Gateway mode: Changing the number of CoreXL firewall instances when Multi-queue is enabled on some or all interfaces

For best performance, the default number of active rx queues is calculated by:

Number of active rx queues = number of CPUs – number of CoreXL firewall instances

This configuration is set automatically when configuring Multi-queue. When changing the number of instances, the number of active rx queues will change automatically if it was not set manually.
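The default calculation can be sketched in a few lines of Python. The function name is hypothetical. The rule that a difference of 0 or 1 leaves Multi-queue disabled is taken from the Troubleshooting section of this document, and returning 0 to represent "disabled" is a convention of this sketch only.

```python
# Hypothetical sketch of the Security Gateway default described above.

def default_active_rx_queues(num_cpus, num_fw_instances):
    """Default number of active rx queues; 0 means Multi-queue is disabled."""
    queues = num_cpus - num_fw_instances
    # With a difference of 0 or 1 there is nothing to spread across CPUs,
    # and Multi-queue is disabled (see Troubleshooting).
    return queues if queues >= 2 else 0


if __name__ == "__main__":
    print(default_active_rx_queues(12, 10))
```

For the twelve core example given earlier (ten CoreXL firewall instances), the result is 2. This sketch does not apply the per-driver recommended maximums from the table in Overriding rx queue and interface limitations.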

- In VSX mode: Changing the number of CPUs that the fwk processes are assigned to

The default number of active rx queues is calculated by:

Number of active rx queues = the lowest CPU ID that an fwk process is assigned to

For example:

[Expert@gw-30123d:0]# fw ctl affinity -l

Mgmt: CPU 0

eth1-05: CPU 0

eth1-06: CPU 1

VS_0 fwk: CPU 2 3 4 5

VS_1 fwk: CPU 2 3 4 5

In this example:

- The number of active rx queues is set to 2.

- This configuration is set automatically when configuring Multi-queue.

- It will not automatically update when changing the affinity of the Virtual Systems. When changing the affinity of the Virtual Systems, make sure to follow the instructions in Advanced Multi-queue settings.
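The VSX rule works because CPU IDs start at 0: if the lowest CPU used by any fwk process is CPU 2, then CPUs 0 and 1 are free for the queues, which gives two active rx queues. A minimal Python sketch of that rule follows. The function name and the input dictionary are hypothetical.

```python
# Hypothetical sketch of the VSX default described above.

def vsx_default_active_rx_queues(fwk_affinity):
    """fwk_affinity maps a Virtual System name to the CPU IDs of its fwk process.

    The default equals the lowest CPU ID used by any fwk process, because
    all CPUs below that ID are left for the interface queues.
    """
    return min(cpu for cpus in fwk_affinity.values() for cpu in cpus)


# Affinity from the example above.
EXAMPLE = {
    "VS_0": [2, 3, 4, 5],
    "VS_1": [2, 3, 4, 5],
}

if __name__ == "__main__":
    print(vsx_default_active_rx_queues(EXAMPLE))
```

Because this value is not updated automatically, recomputing it after a change to Virtual System affinity shows whether the configured number of rx queues still matches.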

The effects of changing the status of a multi-queue enabled interface

- Changing the status to DOWN

The Multi-queue configuration is saved when you change the status of an interface to down.

Since the number of interfaces with Multi-queue enabled is limited to two, you may need to disable Multi-queue on an interface after changing its status to down to enable Multi-queue on other interfaces.

The cpmq set command lets you disable Multi-queue on non-active interfaces.

- Changing the status to UP

You must reset the IRQ affinity for Multi-queue interfaces if these events occurred in this order:

- Multi-queue was enabled on the interface

- You changed the status of the interface to down

- You rebooted the gateway

- You changed the interface status to up.

This problem does not occur if you are running automatic sim affinity (sim affinity -a). Automatic sim affinity runs by default, and has to be manually canceled using the sim affinity -s command.

To set the static affinity of Multi-queue interfaces again, run: cpmq set affinity.

Adding a network interface

- When you add a network interface card to a gateway that uses igb or ixgbe drivers, the Multi-queue configuration can change due to interface indexing. Make sure to configure Multi-queue again or run: cpmq reconfigure

- If a reconfiguration change is required, you will be prompted to reboot the computer.

Changing the affinity of CoreXL firewall instances

Troubleshooting

- After reboot, the wrong interfaces are configured for Multi-queue

This can happen after changing the physical interfaces on the gateway. To solve this issue:

- Run: cpmq reconfigure

- Reboot.

Or configure Multi-queue again.

- When changing the status of interfaces, all the interface IRQs are assigned to CPU 0 or to all of the CPUs

This can happen when an interface status is changed to UP after the automatic affinity procedure runs (the affinity procedure runs automatically during boot).

To solve this issue, run: cpmq set affinity

This problem does not occur if you are running automatic sim affinity (sim affinity -a). Automatic sim affinity runs by default, and has to be manually canceled using the sim affinity -s command.

- In VSX mode, an fwk process runs on the same CPU as some of the interface queues

This can happen when the affinity of the Virtual System was manually changed but Multi-queue was not reconfigured accordingly.

To solve this issue, configure the number of active rx queues manually or run: cpmq reconfigure and reboot.

- In Security Gateway mode: After changing the number of CoreXL firewall instances, Multi-queue is disabled on all interfaces

When changing the number of CoreXL firewall instances, the number of active rx queues automatically changes according to this rule (if not configured manually):

Active rx queues = Number of CPUs – number of CoreXL firewall instances

If the number of instances is equal to the number of CPUs, or if the difference between the number of CPUs and the number of CoreXL firewall instances is 1, multi-queue will be disabled. To solve this issue, configure the number of active rx queues manually by running:

cpmq set rx_num <igb/ixgbe> <value>
