Troubleshooting

| Issue | Solution |
|---|---|
| Enable debugging on each Cross AZ Cluster Member | From the Cross AZ Cluster Member (either one), run in Expert mode:<br>Debug output is written to `$FWDIR/log/aws_had.elg`.<br>To disable debugging, you MUST run the following command on each Cluster Member: |
| Test the environment | To test the Cross AZ Cluster environment, run in Expert mode:<br>This runs tests that verify: |
| Extract Cross AZ Cluster information | From the Cross AZ Cluster Member, run in Expert mode: |
| Extract Cross AZ Cluster state | From the Cross AZ Cluster Member, run in Expert mode:<br>Example output:<br>`Cluster Mode: High Availability (Active Up) with IGMP Membership`<br>`Number  Unique Address  Assigned Load  State`<br>`1 (local)  10.0.1.20  100%  Active`<br>`2  10.0.1.30  0%  Standby`<br>The output of the `cphaprob stat` command on both Cluster Members must show the same information (except the "(local)" string). |
| Permissions required for the Cross AZ Cluster Members' IAM role | Required permissions:<br>`{"Version": "2012-10-17", "Statement": [{"Action": ["ec2:AssignPrivateIpAddresses", "ec2:AssociateAddress", "ec2:CreateRoute", "ec2:DescribeNetworkInterfaces", "ec2:DescribeRouteTables", "ec2:ReplaceRoute"], "Resource": "*", "Effect": "Allow"}]}`<br>If the IAM role is not configured correctly, the Cross AZ Cluster Members cannot communicate with AWS to make the required networking changes when a Cross AZ Cluster Member fails. |
| During failover, the AWS route tables do not change their routes from the failed Member to the Standby Member that became Active | |
|

Issues with Cross AZ Cluster behaviour |

Check that the script responsible for communication with AWS is running on each Cross AZ Cluster Member. On the Cross AZ Cluster Member (either one), run in Expert mode:

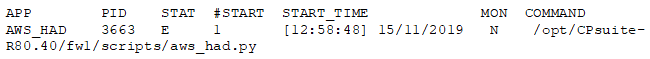

The output should have a line similar to:

Notes:

|

|||

| Cross AZ Cluster with Multiple Elastic IP addresses: Not all Elastic IP addresses move to a new Cluster Member after failover | |
|

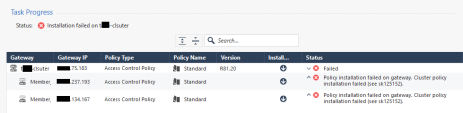

Policy installation on Cluster fails with error: Policy installation failed on gateway. Cluster policy installation failed (see sk125152). |

For example: This error can happen when the Cluster is not configured exactly as described in this guide.

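Before investigating failover behaviour, it can help to confirm that the Cluster Members' IAM role actually grants the permissions listed in the table. The following is a minimal Python sketch, not a Check Point or AWS tool: it parses an IAM policy document (for example, one copied from the AWS console) and reports which of the six required `ec2:` actions are not granted by an `Allow` statement with `"Resource": "*"`. The function names are hypothetical, and the check is deliberately simplified: it recognizes only exact action names and the `ec2:*` / `*` wildcards, and it ignores `Deny` statements (which, in real IAM evaluation, override an `Allow`), `NotAction`, conditions, and permissions boundaries.

```python
import json

# The six actions listed under "Required permissions" in the troubleshooting table.
REQUIRED_ACTIONS = {
    "ec2:AssignPrivateIpAddresses",
    "ec2:AssociateAddress",
    "ec2:CreateRoute",
    "ec2:DescribeNetworkInterfaces",
    "ec2:DescribeRouteTables",
    "ec2:ReplaceRoute",
}


def _as_list(value):
    """IAM accepts either a single string or a list for Action/Resource."""
    return [value] if isinstance(value, str) else list(value)


def missing_actions(policy_json: str) -> set:
    """Return the required actions that no Allow statement on Resource "*" grants.

    Simplified sketch (hypothetical helper): Deny, NotAction and Condition
    elements are not evaluated.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be an object, not a list
        statements = [statements]

    granted = set()
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        if "*" not in _as_list(stmt.get("Resource", [])):
            continue
        for action in _as_list(stmt.get("Action", [])):
            action = action.lower()  # IAM action names are case-insensitive
            if action in ("*", "ec2:*"):
                return set()  # a wildcard covers every required EC2 action
            granted.add(action)

    return {a for a in REQUIRED_ACTIONS if a.lower() not in granted}


if __name__ == "__main__":
    # The policy from the "Permissions required" row of the table.
    table_policy = """
    {
      "Version": "2012-10-17",
      "Statement": [{
        "Action": [
          "ec2:AssignPrivateIpAddresses", "ec2:AssociateAddress",
          "ec2:CreateRoute", "ec2:DescribeNetworkInterfaces",
          "ec2:DescribeRouteTables", "ec2:ReplaceRoute"
        ],
        "Resource": "*",
        "Effect": "Allow"
      }]
    }
    """
    print(sorted(missing_actions(table_policy)))  # prints []
```

Any action names the script prints are candidates to add to the role before testing failover again; an empty list only means the simplified check found no gaps.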
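The requirement that both Cluster Members report the same `cphaprob stat` output (apart from the "(local)" string) can be checked mechanically once the output from each Member has been copied off the gateways. The sketch below is a hypothetical Python helper, not part of Check Point's tooling. It extracts the "Cluster Mode:" line and the member rows from each capture, drops the "(local)" marker, and compares the results. The row pattern is modeled only on the example output in the table. Real `cphaprob` output can include extra columns or sections depending on the version, so treat the regular expression as an assumption to adjust.

```python
import re

# Assumed member-row layout, modeled on the example output in the table:
#   "<number> [(local)] <unique address> <assigned load> <state>"
# Any extra trailing columns are ignored.
MEMBER_ROW = re.compile(
    r"^\s*(\d+)\s+(?:\(local\)\s+)?(\d{1,3}(?:\.\d{1,3}){3})\s+(\d+%)\s+(\S+)"
)


def cluster_view(output: str):
    """Return (cluster mode line, sorted member rows) with "(local)" stripped."""
    mode = None
    members = []
    for line in output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Cluster Mode:"):
            mode = " ".join(stripped.split())  # collapse runs of spaces
            continue
        match = MEMBER_ROW.match(line)
        if match:
            number, address, load, state = match.groups()
            members.append((int(number), address, load, state))
    return mode, sorted(members)


def same_cluster_view(output_a: str, output_b: str) -> bool:
    """True if both captures parse to the same non-empty mode and member table."""
    view_a = cluster_view(output_a)
    return bool(view_a[1]) and view_a == cluster_view(output_b)


if __name__ == "__main__":
    # Illustrative captures only; addresses, loads and states are placeholders.
    member1 = """Cluster Mode:   High Availability (Active Up) with IGMP Membership
Number     Unique Address  Assigned Load   State
1 (local)  10.0.1.20       100%            Active
2          10.0.1.30       0%              Standby
"""
    member2 = """Cluster Mode:   High Availability (Active Up) with IGMP Membership
Number     Unique Address  Assigned Load   State
1          10.0.1.20       100%            Active
2 (local)  10.0.1.30       0%              Standby
"""
    print(same_cluster_view(member1, member2))  # prints True
```

Comparing parsed rows instead of raw text keeps the check insensitive to column spacing. If both Members list themselves as Active, the tuples differ and the function returns `False`.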